Hello Mautic Community,

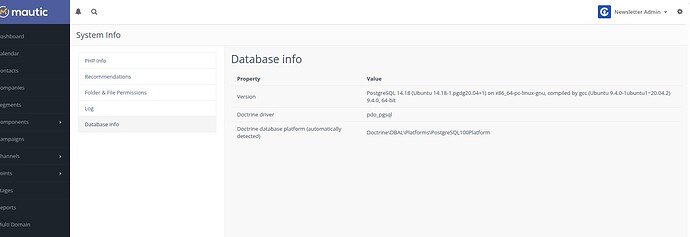

Like many of you, I’ve run into limitations of MySQL/MariaDB in Mautic, such as column size restrictions and the per-table index limit, which have made it hard for me to scale. To address this, I built a small proof of concept (PoC) that runs Mautic 4 (version 4.4.13, which I currently use) on PostgreSQL, using a database converted from MySQL. I’d like to share my approach, discuss the feasibility of restoring PostgreSQL support, and gather your feedback.

My Approach

To minimize changes to Mautic’s core codebase, I took the following steps:

- Analyzed MySQL dependencies: I reviewed Mautic’s core and plugin code to identify MySQL-specific features and functions. Only a few MySQL functions needed to be ported to PostgreSQL equivalents.
- Handled boolean-integer comparisons: A significant challenge was that Mautic’s queries often compare boolean values with integers, which MySQL accepts but PostgreSQL rejects. Rewriting all of these queries would be time-consuming, so I implemented custom PostgreSQL operator functions that make these comparisons work without changing the queries.
- Iterative testing and fixes: Using a “try each Mautic feature and fix the errors” approach, I found and resolved the remaining issues. Surprisingly, only a few code changes were needed to get Mautic running smoothly on the converted PostgreSQL database.
- Scope limitation: For this PoC, I focused on runtime compatibility and did not convert the migration or installation files.
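For anyone who wants to repeat the dependency review, a rough first pass can be automated. The Python sketch below is only an illustration, not the tooling used for this PoC: it searches PHP files for a small, assumed list of MySQL-only SQL functions and suggests a common PostgreSQL counterpart for each. The `MYSQL_ONLY` list and the `scan_text`/`scan_tree` helpers are hypothetical, and a regex scan will miss SQL that is assembled dynamically and will also flag matches inside comments.

```python
import re
from pathlib import Path

# Illustrative, NOT exhaustive: a few MySQL-only SQL functions and a typical
# PostgreSQL counterpart. This list is an assumption, not the PoC's findings.
MYSQL_ONLY = {
    "IFNULL": "COALESCE",
    "GROUP_CONCAT": "string_agg",
    "DATE_FORMAT": "to_char",
    "UNIX_TIMESTAMP": "extract(epoch FROM ...)",
    "FROM_UNIXTIME": "to_timestamp",
    "FIND_IN_SET": "array_position(string_to_array(...), ...)",
}

# Match a listed function name as a whole word followed by "(" (case-insensitive).
CALL_PATTERN = re.compile(
    r"\b(" + "|".join(MYSQL_ONLY) + r")\s*\(", re.IGNORECASE
)


def scan_text(text: str) -> list[tuple[int, str]]:
    """Return (line_number, FUNCTION_NAME) for each MySQL-only call in text."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for match in CALL_PATTERN.finditer(line):
            hits.append((lineno, match.group(1).upper()))
    return hits


def scan_tree(root: str) -> dict[str, list[tuple[int, str]]]:
    """Scan every .php file under root and map each file path to its hits."""
    report = {}
    for path in sorted(Path(root).rglob("*.php")):
        hits = scan_text(path.read_text(encoding="utf-8", errors="replace"))
        if hits:
            report[str(path)] = hits
    return report
```

Pointed at a Mautic source checkout, `scan_tree(...)` returns a per-file list of hits, and `MYSQL_ONLY[name]` gives the suggested replacement to review by hand.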
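The boolean-integer workaround can be illustrated with a small Python script that generates PostgreSQL DDL. This is a minimal sketch under stated assumptions, not the PoC’s actual functions: the `mautic_*` function names are hypothetical, only `=` and `<>` are covered, and `true`/`false` are treated as `1`/`0` as in MySQL. Each comparison gets a SQL function plus a `CREATE OPERATOR` for both argument orders, so a predicate such as `bool_col = 1` on a boolean column resolves to the custom operator instead of failing with “operator does not exist”.

```python
# Sketch: emit PostgreSQL DDL so boolean and integer values can be compared
# MySQL-style (true = 1, false = 0). Function names are hypothetical.

SHORT = {"boolean": "bool", "integer": "int"}
OPERATORS = {"=": ("eq", "<>"), "<>": ("ne", "=")}  # op -> (name, negator op)


def bool_int_operator_ddl() -> str:
    """Return DDL defining = and <> for (boolean, integer) and (integer, boolean)."""
    statements = []
    for op, (name, negator) in OPERATORS.items():
        for left, right in (("boolean", "integer"), ("integer", "boolean")):
            func = f"mautic_{name}_{SHORT[left]}_{SHORT[right]}"
            # Cast whichever side is boolean to integer, so true -> 1, false -> 0.
            lhs = "$1::integer" if left == "boolean" else "$1"
            rhs = "$2::integer" if right == "boolean" else "$2"
            statements.append(
                f"CREATE OR REPLACE FUNCTION {func}({left}, {right})\n"
                f"  RETURNS boolean LANGUAGE sql IMMUTABLE\n"
                f"  AS $$ SELECT {lhs} {op} {rhs} $$;"
            )
            # COMMUTATOR names the mirrored (right, left) operator and NEGATOR the
            # opposite comparison; PostgreSQL creates shell entries for any that
            # don't exist yet and fills them in when they are defined later.
            statements.append(
                f"CREATE OPERATOR {op} (LEFTARG = {left}, RIGHTARG = {right},\n"
                f"  FUNCTION = {func}, COMMUTATOR = {op}, NEGATOR = {negator});"
            )
    return "\n\n".join(statements)


if __name__ == "__main__":
    print(bool_int_operator_ddl())
```

The printed statements can be piped into `psql` against the converted database once; `CREATE OPERATOR` has no `OR REPLACE` form, so a second run fails on the existing operators. Because PostgreSQL rewrites `!=` to `<>`, both spellings are covered. Cross-type operators change operator resolution for the whole database, so they deserve careful testing.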

Current Status

My PoC shows that running Mautic 4.4.13 on PostgreSQL is achievable with minimal code changes. I’m waiting for the official release of Mautic 7.0 before testing and porting this solution to the latest version.

Call for Discussion

I believe restoring PostgreSQL support could benefit the Mautic community, especially for users needing a more robust database to handle larger datasets or specific use cases. This PoC suggests it’s feasible with limited resources, but I’d love to hear your thoughts:

- Are you interested in PostgreSQL support for Mautic? Why?
- What challenges or concerns do you foresee in maintaining PostgreSQL compatibility?
- Would you be willing to collaborate on or test a PostgreSQL-compatible version?

Please note: This is a proof of concept, not official or unofficial support. I’m sharing it to gauge interest and spark discussion about reintroducing PostgreSQL as a supported database. Looking forward to your ideas and feedback!

Best regards,

Wieslaw Golec